Amirabbas Afzali

I’m Amirabbas Afzali, a B.Sc. student in Electrical Engineering with a minor in Mathematics at Sharif University of Technology, specializing in Communication Systems. Last summer, I was a research intern at the MLBio Lab at EPFL, working with Prof. Maria Brbić on Weak-to-Strong generalization for preference alignment in large language models.

I’m broadly interested in reliable decision-making in machine learning systems, including trustworthy ML, optimization, and reinforcement learning, especially where these topics intersect with human-AI alignment.

My current research focuses on understanding how preferences, robustness, and feedback signals shape model behavior. My recent work spans several research areas, including:

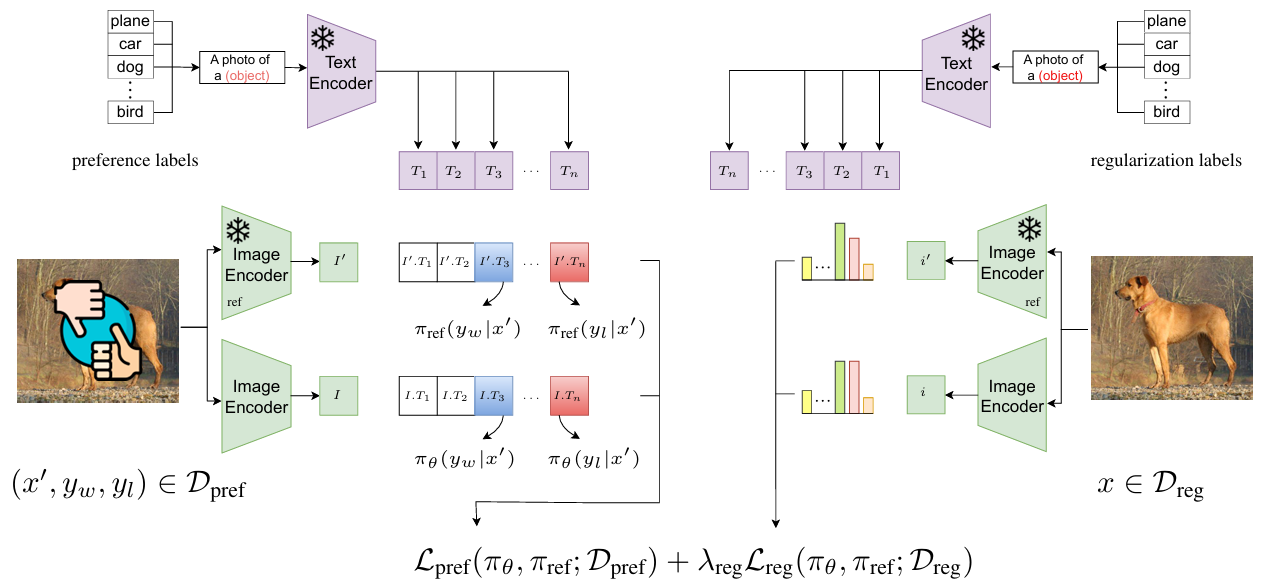

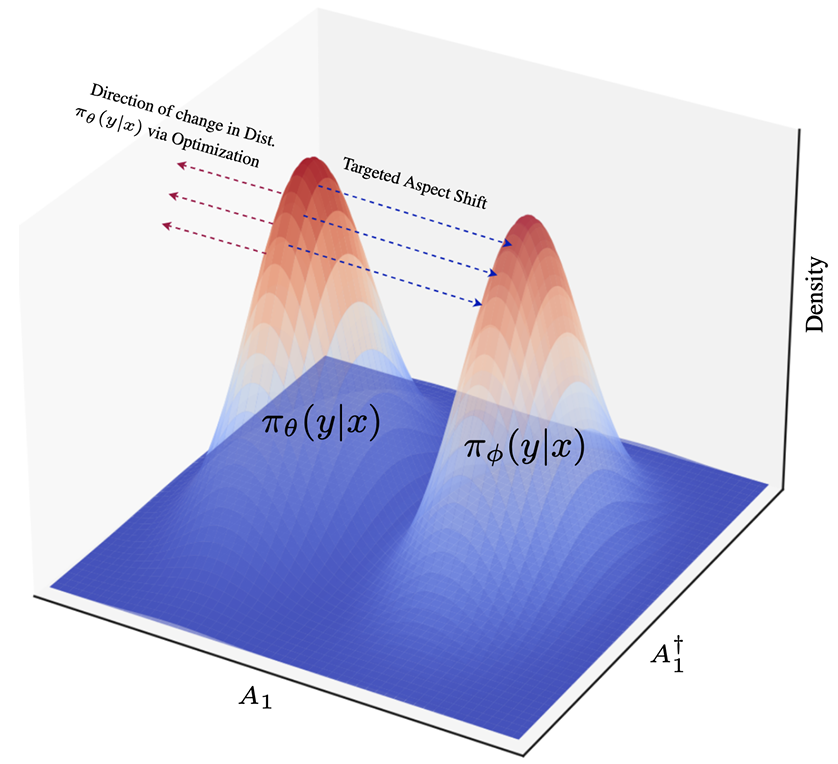

- Post-training techniques for LLMs, such as preference learning and alignment

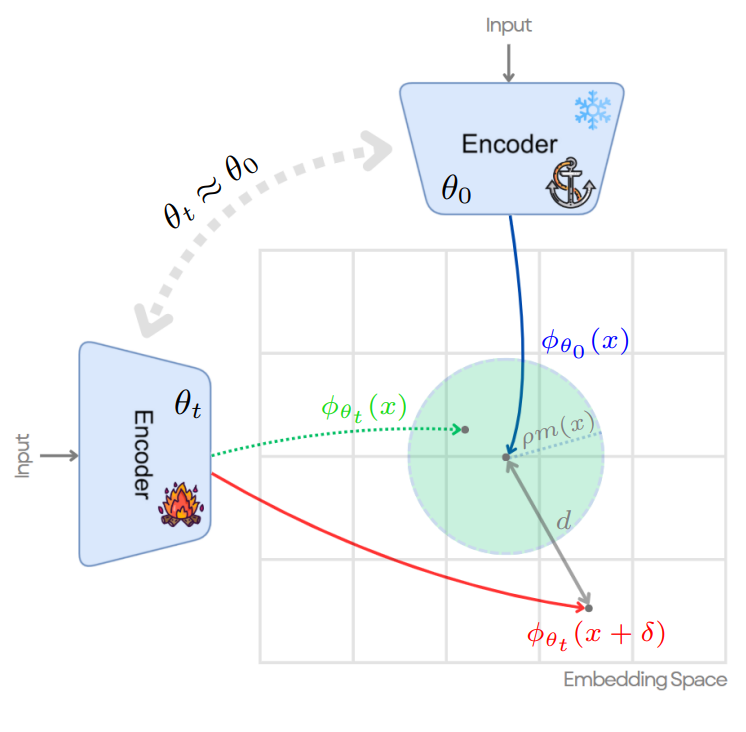

- Trustworthy and robust machine learning, with emphasis on adversarial robustness and safety

- Offline and robust reinforcement learning

Lately, I’ve been especially interested in the following topics; feel free to reach out if they resonate with you:

(i) LLM safety and adversarial alignment

(ii) Steering vectors for test-time alignment

(iii) Certified robustness and model verification